Azure Percept - First Look


A few weeks back, I was lucky enough to get my hands on the brand new Azure Percept. We UK folks have been waiting patiently for the release date, but thanks to some behind-the-scenes work from the IoT Team, Cliff Agius and I were given a pair of development systems to play with and write content around.

This post covers my first impressions of the kit, which we unboxed live on our Twitch show, IoTeaLive.

What is the Percept?

The Azure Percept is a Microsoft Developer Kit designed to fast-track development of AI (Artificial Intelligence) applications at the edge.

Once you've unboxed the Percept, you'll see its three primary components: the Carrier Board, the Eye (Vision Module) and the optional Ear (Audio Module).

This kit looks fantastic in silver, and I believe the production version will be black, which also looks great from the images I've seen.

What are the Specs?

At a high level, the Percept Developer Kit has the following specs:

Carrier (Processor) Board:

- NXP i.MX 8M processor

- Trusted Platform Module (TPM) version 2.0

- Wi-Fi and Bluetooth connectivity

Vision SoM:

- Intel Movidius Myriad X (MA2085) vision processing unit (VPU)

- RGB camera sensor

Audio SoM:

- Four-microphone linear array and audio processing via XMOS Codec

- 2x buttons, 3x LEDs, Micro USB, and 3.5 mm audio jack

Who's it aimed at?

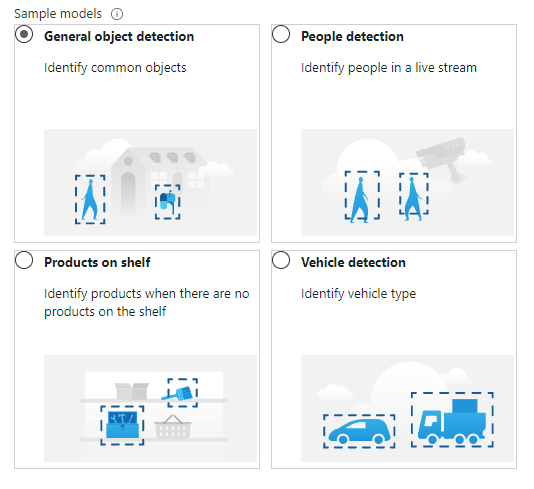

The Percept is currently aimed at a few target markets, which becomes clear when we look at some of the models we can deploy.

These models can be deployed to quickly configure the Percept to recognise objects, sounds and events in a range of environments, such as:

- General Object Detection

- Items on a Shelf

- Vehicle Analytics

- Keyword and Command recognition

- Anomaly Detection, etc.

Azure Percept Studio

Microsoft provide a suite of software to interact with the Percept, centred around Azure Percept Studio, an Azure-based dashboard for the Percept.

Once you've gone through the setup experience, you're taken to Percept Studio to start playing with the device.

Percept Studio feels just like most other parts of the Azure Portal and is broken down into several main sections:

Overview:

This section gives us an overview of Percept Studio, including:

- A Getting Started Guide

- Demos & Tutorials

- Sample Applications

- Access to some Advanced Tools including Cloud and Local Development Environments as well as setup and samples for AI Security.

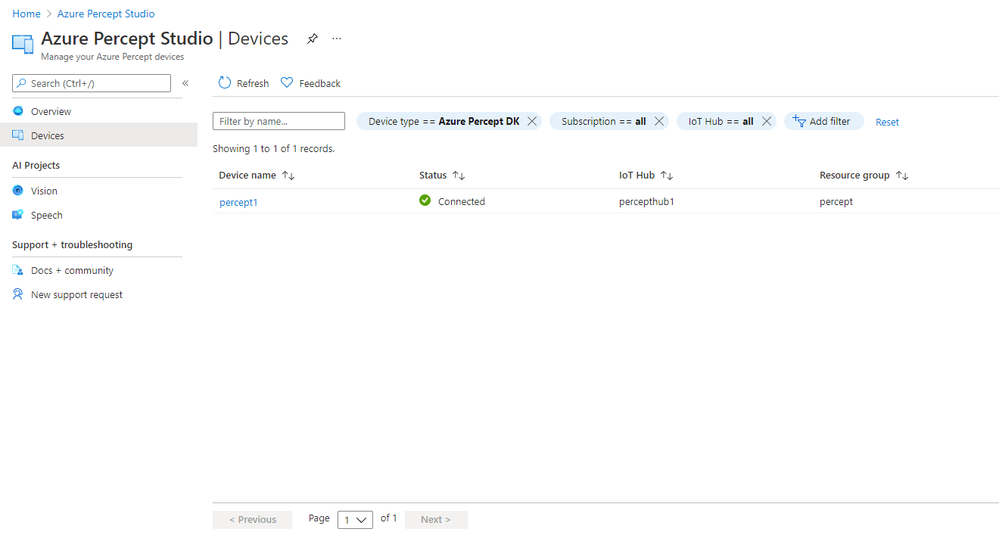

Devices:

The Devices Page gives us access to the Percept Devices we’ve registered to the solution’s IoT Hub.

We can click into each registered device for information about its operations:

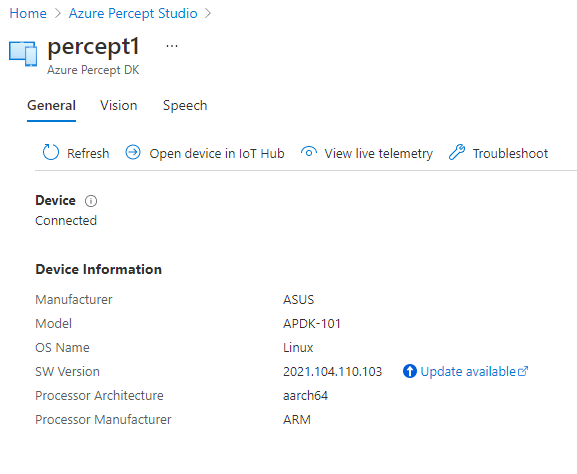

This area is broken down into;

- A General page with information about the device specs and software version

- Pages with software information for the Vision and Speech modules deployed to the device, as well as links to capture images, view the Vision video stream, deploy models and so on

- A link to open the device directly in Azure IoT Hub

- A view of the live telemetry from the Percept

- Help links for troubleshooting the Percept
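The live telemetry view shows the inference results the Vision module sends up to IoT Hub. As a minimal sketch, assuming each message carries a JSON body with a `NEURAL_NETWORK` list of detections (each with a normalised `bbox`, a `label`, a `confidence` sent as a string, and a `timestamp`), a small Python helper could pull out the confident detections like this. The field names, the message shape and the 0.5 threshold are all assumptions for illustration, not a documented contract.

```python
import json
from typing import List, Tuple

# Assumed message shape (illustrative, not a documented contract):
# {"NEURAL_NETWORK": [{"bbox": [x1, y1, x2, y2], "label": "person",
#                      "confidence": "0.93", "timestamp": "..."}]}


def confident_detections(message: str, threshold: float = 0.5) -> List[Tuple[str, float]]:
    """Return (label, confidence) pairs at or above the threshold."""
    body = json.loads(message)
    results = []
    for detection in body.get("NEURAL_NETWORK", []):
        # Confidence is assumed to arrive as a string, so convert before comparing.
        confidence = float(detection.get("confidence", 0))
        if confidence >= threshold:
            results.append((detection.get("label", "unknown"), confidence))
    return results


if __name__ == "__main__":
    sample = json.dumps({
        "NEURAL_NETWORK": [
            {"bbox": [0.1, 0.2, 0.5, 0.9], "label": "person",
             "confidence": "0.93", "timestamp": "1614645813390137432"},
            {"bbox": [0.6, 0.4, 0.8, 0.7], "label": "chair",
             "confidence": "0.31", "timestamp": "1614645813390137432"},
        ]
    })
    print(confident_detections(sample))  # [('person', 0.93)]
```

Raising or lowering `threshold` trades missed objects against false positives, which is handy when deciding what should trigger any downstream IoT workload.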

Vision:

The Vision Page allows us to create new Azure Custom Vision Projects as well as access any existing projects we’ve already created.

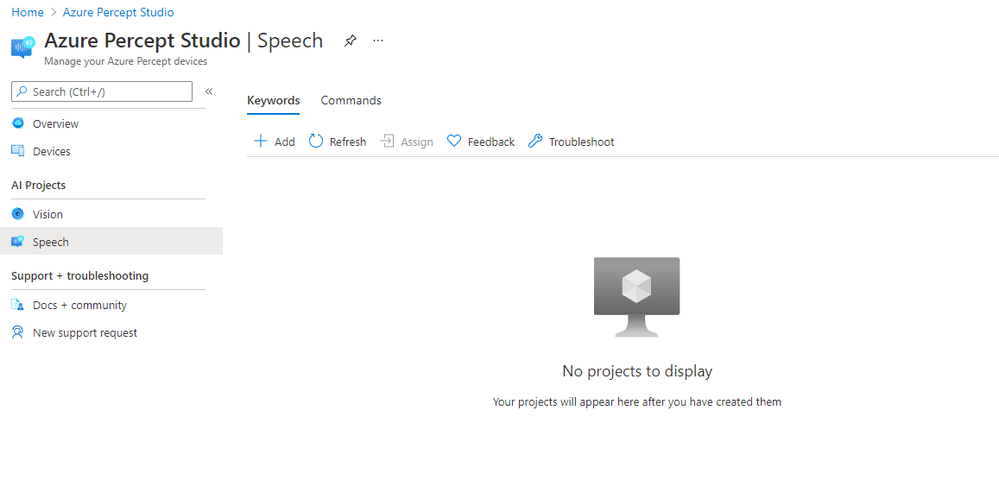

Speech:

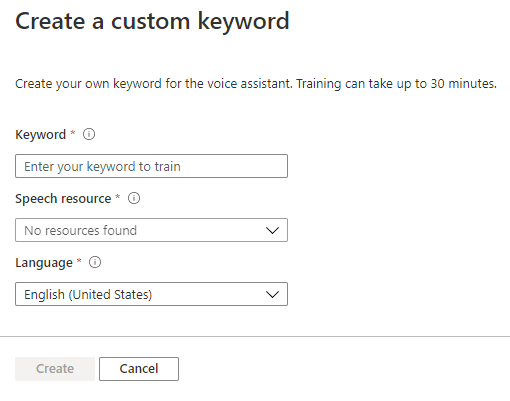

The Speech page lets us train Custom Keywords, which allow the device to be voice activated.

We can also create Custom Commands, which initiate an action we configure.

Percept Speech relies on various Azure Services including LUIS (Language Understanding Intelligent Service) and Azure Speech.

Thoughts on the Vision SoM

During the unboxing and first-steps Twitch stream, we mainly played with the Vision SoM. I already had a really old CustomVision.ai model set up and trained from a talk I gave at the AI Roadshow a year or so ago.

It was an image classification project demoing ObjectNet, an image set from MIT containing items in unusual orientations and locations, designed to fool AI. I was showing how AI could confuse a hammer on a bed for a screwdriver.

It took me a while to realise that I needed to create a new object detection project to see Percept Vision in full: as an image classification model, my old project only ever returned one tag per image, so it couldn't identify several objects in a single image.
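The difference is easiest to see in the shape of the results. As a rough sketch, assuming Custom Vision-style predictions (a `tagName`, a `probability` and, for object detection only, a `boundingBox`), a classifier effectively answers with one best tag for the whole image, while a detector can report every object it locates above a threshold. The helper names and sample values below are hypothetical and made up for illustration.

```python
from typing import Any, Dict, List

# Hypothetical predictions in a Custom Vision-like shape (tagName, probability,
# and boundingBox for object detection only). Values are illustrative.
Prediction = Dict[str, Any]


def top_classification(predictions: List[Prediction]) -> str:
    """Image classification: report the single most probable tag for the whole image."""
    best = max(predictions, key=lambda p: p["probability"])
    return best["tagName"]


def detected_objects(predictions: List[Prediction], threshold: float = 0.5) -> List[str]:
    """Object detection: keep every located object at or above the threshold."""
    return [
        p["tagName"]
        for p in predictions
        if "boundingBox" in p and p["probability"] >= threshold
    ]


if __name__ == "__main__":
    # A classifier scores tags for the whole image, so only one answer comes back.
    classifier_output = [
        {"tagName": "screwdriver", "probability": 0.62},
        {"tagName": "hammer", "probability": 0.38},
    ]
    # A detector returns a box per object, so several objects can be reported.
    detector_output = [
        {"tagName": "hammer", "probability": 0.88,
         "boundingBox": {"left": 0.10, "top": 0.20, "width": 0.30, "height": 0.25}},
        {"tagName": "bed", "probability": 0.91,
         "boundingBox": {"left": 0.00, "top": 0.40, "width": 0.90, "height": 0.55}},
    ]
    print(top_classification(classifier_output))  # screwdriver
    print(detected_objects(detector_output))      # ['hammer', 'bed']
```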

The process of tagging and categorising images was very easy, if a bit laborious, given that I needed at least 15 images of each category before the auto training could begin. But once you have enough images, the process speeds up a bit.
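Before kicking off training, it can help to check which tags still fall short of that minimum. A minimal sketch, assuming tagged images are tracked as simple (image, tag) pairs and using the 15-per-category minimum mentioned above; `tags_needing_images` is a hypothetical helper, not part of any Custom Vision SDK.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

MIN_IMAGES_PER_TAG = 15  # the per-category minimum mentioned above


def tags_needing_images(tagged_images: Iterable[Tuple[str, str]],
                        tags: Iterable[str],
                        minimum: int = MIN_IMAGES_PER_TAG) -> Dict[str, int]:
    """Return how many more images each under-represented tag needs."""
    # Count one entry per (image, tag) pair; Counter returns 0 for unseen tags.
    counts = Counter(tag for _image, tag in tagged_images)
    return {tag: minimum - counts[tag] for tag in tags if counts[tag] < minimum}


if __name__ == "__main__":
    images = [(f"hammer_{i}.jpg", "hammer") for i in range(15)]
    images += [(f"screwdriver_{i}.jpg", "screwdriver") for i in range(9)]
    print(tags_needing_images(images, ["hammer", "screwdriver", "spanner"]))
    # {'screwdriver': 6, 'spanner': 15}
```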

Plans

Now that I've had some time to play with the basics, my next port of call is the Audio SoM. I've played with Alexa Skills in the past, so I'm interested to see how Speech projects compare. It'd be great to integrate other Azure services, as well as falling back on my mainstay, IoT Hub, to drive some IoT workloads; perhaps some home automation!

Beyond that, I've spoken to a few clients, one of whom works in the ecology and wildlife sector, and they're really interested in whether the Percept could help in human-animal conflict situations. Definitely a great use case!

Where can I get some more information?

- Cliff Agius has written an excellent blog post on his first impressions of the Azure Percept Developer Kit. You can find that here

- Cliff Agius, Mert Yeter, John Lunn and I recorded a Percept Special IoTeaLive show of the unboxing and first steps on the AzureishLive Twitch channel.

- Official Azure Percept Page

- Azure Percept MS Docs

- The Azure Percept YouTube Channel has some fantastic videos around the Percept

- Olivier Bloch from the Microsoft IoT Team has hosted some great Azure Percept episodes of the IoT Show

Thanks

Thanks to the whole IoT team for letting us have the Percept kits so we can start playing with them. Thanks too to the ace Cliff Agius for bringing mine all the way back from Seattle!
