- Home

- Azure Data

- Azure Synapse Analytics Blog

- How to query your Delta Lake with Azure Synapse SQL pool

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Querying Delta Lake files using T-SQL in Azure Synapse Analytics is now generally available. In this blog post, you will learn how to use serverless SQL pool and T-SQL language to analyze your Delta Lake files from your Azure Synapse workspace.

Who this is for?

There are two primary roles that will benefit from this blog post.

Your query language of choice is SQL and you use reporting, analytics, and business intelligence tools like Power BI to empower business outcomes with data insights. You need to access Data Lake from the reporting tools using some SQL connection.

Your go-to framework of choice is Apache Spark. You work in work in multiple environments to make data systems operational. You oversee getting data into a consumable format for your organization to extract value from. You need to enable business analysts to access data that you produce using SQL-like abstractions.

Sharing data today

Organizations today have sophisticated data teams that use various sources, devices, and applications. They also have a need to share the data between producers and consumers/analysts. The data lake concept enables various teams and tools to seamlessly share data. Data lakehouse enables you to manage and analyze your data stored in the data lake and still keep your data shared with other applications.

Serverless SQL pool is the T-SQL implementation of the lakehouse pattern. It enables your teams to share data stored in the data lake using SQL abstractions and T-SQL language that can be used by a large ecosystem of reporting tools that can display data to the business users. The serverless SQL pool in Azure Synapse represents a bridge between reporting tools and your data lake data.

Your data lake might be composed of millions of CSV and Parquet files, and they are perfect for scenarios where you ingest new data and analyze the entire data set. The problem with this is that the data that you store cannot be easily changed. This means they work best for selecting and viewing and may not provide the most up-to-date version for business intelligence and machine learning.

The teams that want to have both updateable data and flexibility to share data with analysts who use reporting tools such as Power BI may face a complex scenario. They might need to choose between ingesting data into data warehouses and keeping the data in the data lake. Data warehouses provide full updateability and access to data using the SQL language, but limit sharing with Spark and other tools. Data lakes enable easy sharing, but cannot be easily updated.

The solution to this is Delta Lake.

What is Delta Lake?

Delta lake format is one of the most widely used enhancements of the plain Parquet format. The Parquet files are read-only and enable you to append new data by adding new Parquet files into folders. Delta Lake is based on Parquet, so it provides excellent compression and analytic capabilities, but it also enables you to update, delete, merge data sets with ACID guarantees.

Why Delta Lake?

Delta Lake format enables you to update your data lake data without the need to permanently ingest it into database tables. Delta Lake is one of the most popular updateable big data formats in big data solutions and is frequently used by data engineers who need to prepare, clean, or update data stored in the data lake, or apply machine learning experiments. Updateable data formats provide ACID guarantees on write, error corrections, and time travel/versioning.

With the Delta Lake format, you are getting the best both from data warehouse and data lakehouse worlds – where data can now be easily shared and updated.

In the rest of this article, you will learn how serverless SQL pool enables you to implement T-SQL lakehouse pattern on Delta Lake files and connect your reporting and analytic ecosystem with the updateable lakehouse.

Unified Analytics

Traditionally, analytics projects are driven by competing needs. Companies have built multiple analytics projects, each one looking at different lines of business or various parts of a value chain. There are dataset sprawls that are difficult to consume and track, islands of machine-generated data are ingested, managed, and governed with an omnichannel experience. There lies the burden of data architecture.

Within the Microsoft cloud ecosystem, Azure Synapse Analytics helps remove that unnecessary burden and provides a unified experience to ingest, explore, prepare, manage, and serve data for immediate BI and machine learning needs. This gives organizations speed to insights and agility with their data, so they can truly engage with their customers in new, meaningful ways. It will empower employees with the real-time insights they need to drive business outcomes.

Breaking it down a bit more

Azure Synapse’s analytic runtimes are made up of SQL pool (dedicated and serverless), Apache Spark, and Data Explorer. We will focus today on the serverless SQL pool and Apache Spark pool where you will use to implement the data lakehouse pattern.

For data engineers, Azure Synapse natively runs Apache Spark at cloud-scale. You can use Spark pools to enable data engineering and machine learning workloads from your Delta Lake data sets. We will frequently see data being updated, refined, and enhanced through multiple layers (bronze, silver, gold).

Once your data engineering teams prepare data, they need to enable data analysts to use Power BI, Analysis Services, and other tools to query the data lake. For analysts who use tools that heavily rely on SQL, Azure Synapse has them covered with serverless SQL capabilities. The serverless pool represents a bridge between reporting tools and your data lake. With Delta Lake support in serverless SQL pool, your analysts can easily perform ad-hoc Delta Lake queries and show the results on the reports. This means business users can query Delta Lake files using SQL and no longer need to worry about managing compute, waiting for notebooks to start, or even think about manually pausing/resuming clusters. And you only pay for what you query.

How to query Delta Lake with SQL on Azure Synapse

As mentioned earlier, Azure Synapse has several compute pools for the evolving analytical workload. There is the Apache Spark pool for data engineers and serverless SQL pool for analysts. Let us break down how the two personas work together to query a shared Delta Lake.

Use Apache Spark for data preparation and transformation

Delta Lake content is updated using the Apache Spark pools in Synapse Analytics. Apache Spark pools in Azure Synapse will enable data engineers to modify their Delta Lake files using Scala, PySpark, and .NET. Azure Synapse notebooks are used to execute data engineering jobs and the Apache Spark pool brings auto-scaling capabilities to fit the demands of your analytical workloads.

If you are new to Spark pool in Azure Synapse, learn how to spin up your first Apache Spark pool here.

Once your data is prepared, cleaned, and ready for analysis, it is time to present the data to the business users using reporting tools.

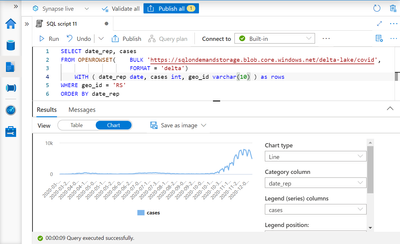

Using SQL to query your Delta Lake

With serverless SQL pool, analysts can use familiar T-SQL syntax to create views on the Delta Lake from Synapse Studio or SQL Server Management Studio (SSMS).

Business analysts can create self-service BI reports on the files created by the data engineers and derive insights from their Delta Lake, made visible with Power BI.

The reports can be easily created in Synapse Studio, Power BI, or any other reporting tool that can use SQL API. As you can see, there are many benefits to using Azure Synapse for data sharing. To summarize:

- Easy creation of reports on top of Delta Lake files without the need to copy, load, or transform data. It is the bridge between reporting tools and data.

- Connecting a large ecosystem of reporting and analysis tools with your data stored in Delta Lake format.

- Favorable pay-per-use consumption model where you don’t need to pre-provision resources. You are paying only for the queries that you are executing.

Next steps:

- Customers with qualifying subscription types can now try serverless SQL pool and Apache Spark pool resources in Azure Synapse using the limited-time free quantities offer. Sign in to your Azure Synapse Analytics account to get started.

- If you are new to Azure Synapse, check out our free hands-on training where we show you how to use Azure Synapse in 60 minutes. This six-part training series can be accessed on-demand here.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.