- Azure Percept DK : The one about AI Model possibilities

Do you have great ideas for AI solutions but lack knowledge of data science, machine learning, or how to build edge AI solutions? When Azure Percept was first released, I was so excited to get it set up and start training models that I recorded a neat unboxing YouTube video that shows you how to do that in under 8 minutes. Right out of the box it could do some pretty cool stuff, like object detection and image classification for scenarios such as people counting and object recognition, and it even includes a programmable voice assistant!

Today I want to focus on what you get by way of models supported out of the box and what you can bring to the party.

1. Out-of-the-box AI Models:

The Azure Percept DK's azureeyemodule supports a few AI models out of the box. The default model is a Single Shot Detector (SSD) trained for general object detection on the COCO dataset. Azure Percept Studio also contains sample models for the following applications:

- people detection

- vehicle detection

- general object detection

- products-on-shelf detection

With pre-trained models, no coding or training data collection is required. Simply deploy your desired model to your Azure Percept DK from the portal and open your devkit's video stream to see the model inferencing in action.

2. Other officially supported models:

Not all models are found inside the Azure Percept Studio. Here are the links for the models that we officially guarantee (because we host them and test them on every release). Below are some of the fun ones you may want to try out:

-

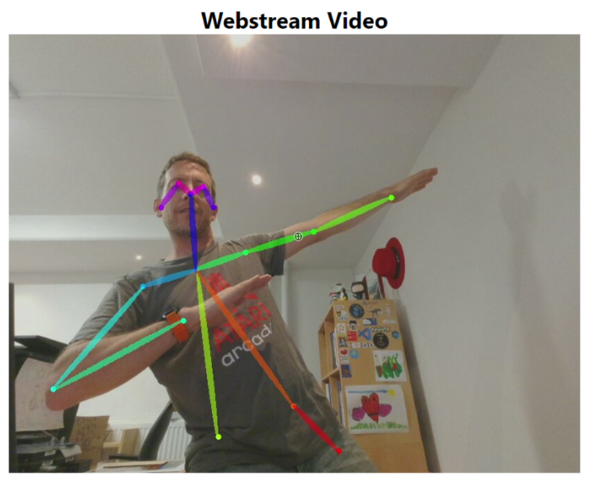

OpenPose - multi-person 2D pose estimation network (based on the OpenPose approach)

-

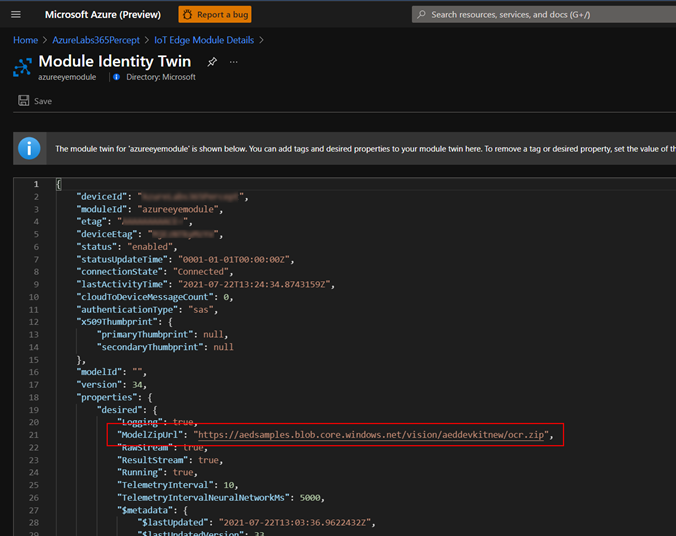

OCR - Text detector based on PixelLink architecture with MobileNetV2

You will notice that many come from our collaboration with Intel and their Open Model Zoo, through support for the OpenVINO™ toolkit. We've made these models really easy to use: paste a model's URL into the IoT Hub Module Identity Twin associated with your Percept device as the value for "ModelZipUrl", or download the model, host it somewhere like container storage, and point "ModelZipUrl" at that location instead.
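To make the "ModelZipUrl" step a little more concrete, here is a minimal sketch in Python of what the twin update could look like. It only builds the JSON patch and does not talk to IoT Hub. I am assuming "ModelZipUrl" lives under the twin's desired properties, which is where IoT Hub twins keep settings pushed from the cloud; the helper name `build_model_twin_patch` and the example URL are made up for illustration.

```python
import json
from urllib.parse import urlparse


def build_model_twin_patch(model_zip_url: str) -> dict:
    """Build a module twin patch that points ModelZipUrl at a hosted model zip.

    Hypothetical helper: it only constructs the JSON body, it does not
    contact IoT Hub. Placing ModelZipUrl under properties.desired is an
    assumption based on how IoT Hub twins carry cloud-side settings.
    """
    parsed = urlparse(model_zip_url)
    # Reject anything that is not an absolute http(s) URL before it reaches the twin.
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"Expected an absolute http(s) URL, got: {model_zip_url!r}")
    return {"properties": {"desired": {"ModelZipUrl": model_zip_url}}}


if __name__ == "__main__":
    # Placeholder URL, not a real model location.
    patch = build_model_twin_patch("https://example.com/models/openpose.zip")
    print(json.dumps(patch, indent=2))
```

You could paste the printed JSON into the twin editor in the portal or send it with whichever IoT Hub tooling you already use. The same patch works for a model you host yourself, as long as the device can reach the URL.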

If you are worried about the security of your AI model, Azure Percept provides protection at rest and in transit, so check out Protecting your AI model and sensor data.

3. Build and train your own custom vision model:

Azure Percept Studio enables you to build and deploy your own custom computer vision solutions with no coding required. This typically involves the following:

- Create a vision project in Azure Percept Studio

- Collect training images with your devkit

- Label your training images in Custom Vision

- Train your custom object detection or classification model

- Deploy your model to your devkit

- Improve your model by setting up retraining

A full article details all the steps you need to train a model so you can do something like sign language recognition. I did in fact record a video a while back that shows you not only how to do this, but also how to transcribe the signs into text using PowerApps, all with no code.

4. Bring your own model:

If you do have data science skills, or you have already built your own solution and trained your model, and you want to deploy it on Azure Percept, that too is possible. There is a great tutorial/lab that will take you through some Jupyter notebooks to capture images, label them, train your model, and package and deploy it to Azure Percept without using Azure Percept Studio or Custom Vision projects. This material leverages the Azure Machine Learning service we have developed to do the heavy lifting for compute, labelling and training, but for the most part it is orchestrated right out of the Jupyter notebook.

There is another tutorial that will walk you through the banana U-Net semantic segmentation notebook, which will train a semantic segmentation model from scratch using a small dataset of bananas, and then convert the resulting ONNX model to OpenVINO IR, then to OpenVINO Myriad X .blob format, and finally deploy the model to the device.
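The ONNX to OpenVINO IR to Myriad X .blob chain is easier to picture as two command lines, so here is a rough Python sketch that assembles them without running anything. It is not the notebook's actual code. I am assuming the OpenVINO Model Optimizer is available as `mo` and the compiler as `compile_tool`, with the flags as I know them from 2021-era OpenVINO releases, so check them against the version the tutorial pins. The file name `banana_unet.onnx` and the helper `conversion_commands` are hypothetical.

```python
from pathlib import Path
from typing import List


def conversion_commands(onnx_path: str, out_dir: str = "ir") -> List[List[str]]:
    """Return the two command lines for ONNX -> OpenVINO IR -> Myriad X blob.

    Hypothetical helper: tool names and flags are assumptions based on
    2021-era OpenVINO tooling and may differ in other releases.
    """
    stem = Path(onnx_path).stem
    xml_path = str(Path(out_dir) / f"{stem}.xml")    # IR topology file written by step 1
    blob_path = str(Path(out_dir) / f"{stem}.blob")  # compiled model produced by step 2

    # Step 1: Model Optimizer converts the ONNX model to IR (.xml + .bin) in FP16.
    mo_cmd = ["mo", "--input_model", onnx_path,
              "--output_dir", out_dir, "--data_type", "FP16"]

    # Step 2: compile_tool compiles the IR for the Myriad X VPU into a .blob file.
    compile_cmd = ["compile_tool", "-m", xml_path, "-d", "MYRIAD", "-o", blob_path]

    return [mo_cmd, compile_cmd]


if __name__ == "__main__":
    # Print the commands rather than executing them, so this runs without OpenVINO installed.
    for cmd in conversion_commands("banana_unet.onnx"):
        print(" ".join(cmd))
```

If the tools are installed, each list can be handed to `subprocess.run(cmd, check=True)` in order. The resulting .blob is what then gets packaged and deployed to the device.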

Conclusion/Resources:

Hopefully it is now clear what the various options are for getting your AI Model from silicon-to-service, the way you want, with the speed and agility the Azure Percept DK offers. Whether you are a hobbyist, developer or data scientist, the Azure Percept DK offers a way to get your ideas into production faster, leveraging the power of the cloud, AI and the edge. Here are some resources to get you started:

- Purchase Azure Percept

- Architecture and Technology

- Technical Overview of Azure Percept with Microsoft Mechanics by George Moore - Build & Deploy to edge AI devices in minutes - Azure Percept enables simple AI and computing on the edge

- Industry Use Cases and Community Projects:

- Azure Percept showing Edge Computing and AI in the Agriculture Summit keynote by Jason Zander - YouT...

- Set up your own end-to-end package delivery monitoring AI application on the edge with Azure Percept

- Live simulation of Azure Percept from Alaska Airlines: Azure Percept dev kit to detect airplanes and...

- BlueGranite's Smart City Solution

- Perceptmobile: Azure Percept Obstacle Avoidance LEGO Car
